Summary

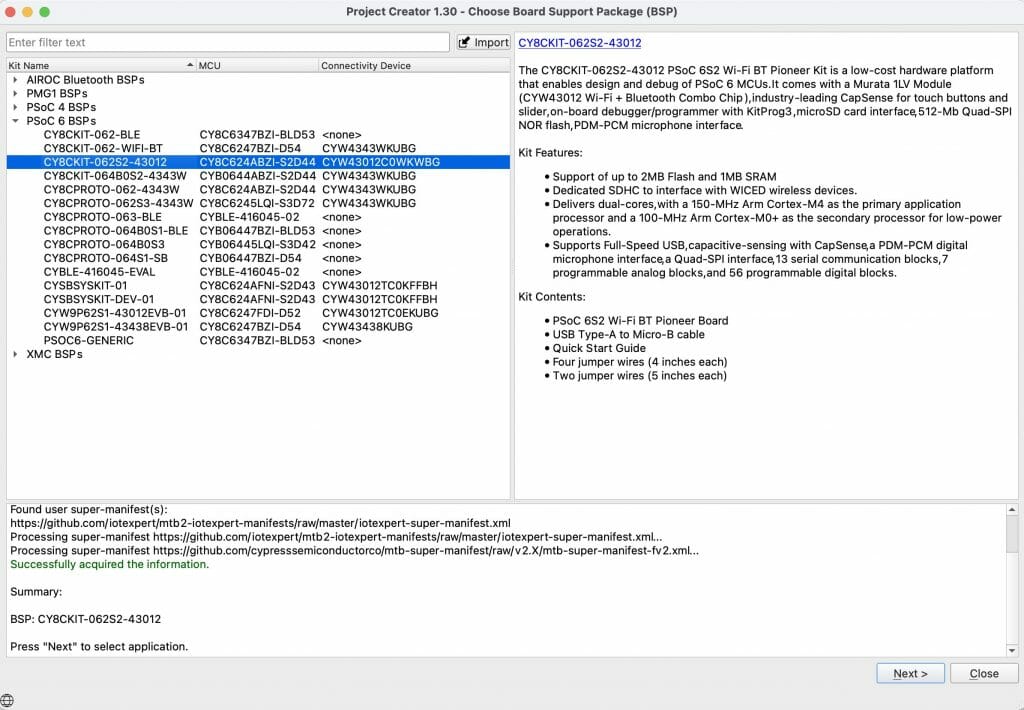

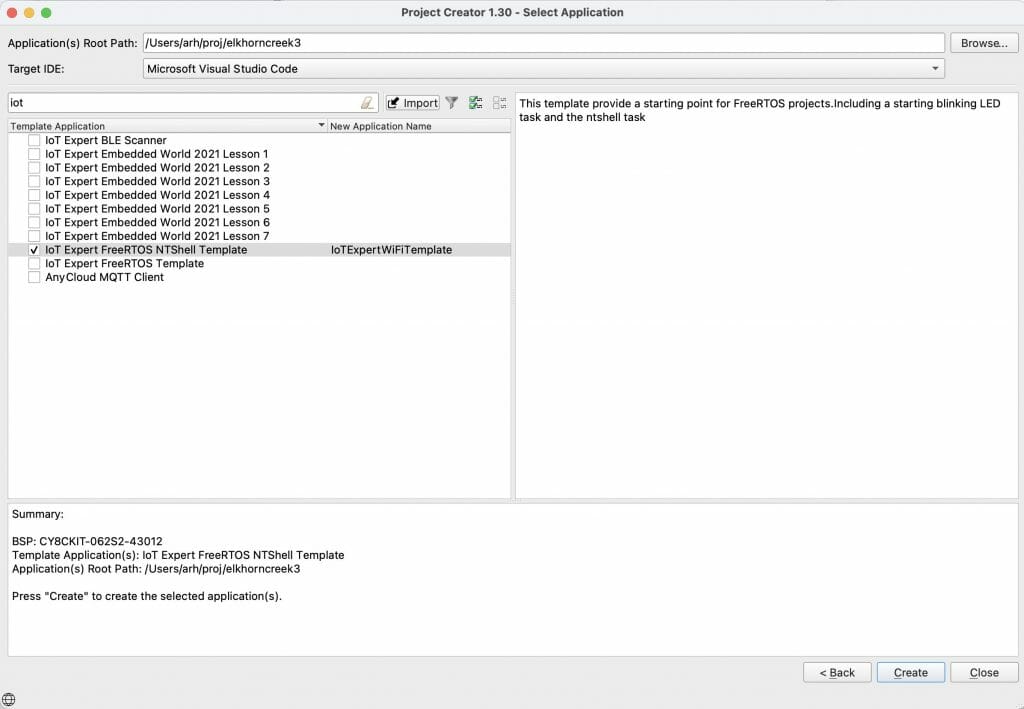

Instructions on using the AnyCloud Wireless Connection Manager to enable WiFi. This article is Part 2 of a series that will build a new IoT Expert template project for WiFi.

Article

(Part 1) Create Basic Project & Add Cypress Logging Functionality

(Part 2) Create New Thread to manage WiFi using the Wireless Connection Manager

(Part 3) Create a New Middleware Library with WiFi helper functions

(Part 4) Add WiFi Scan

Add WiFi Connect

Add WiFi Disconnect

Add WiFi Ping

Add Gethostbyname

Add MDNS

Add Status

Add StartAP

Make a new template project (update manifest)

Story

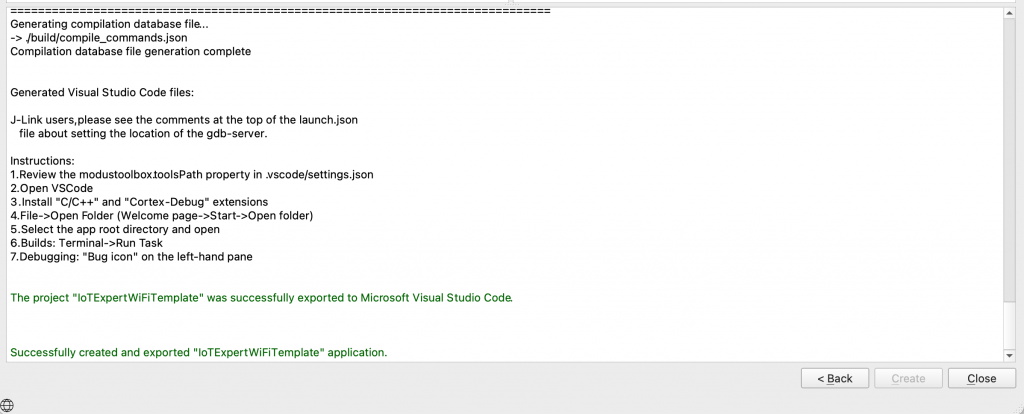

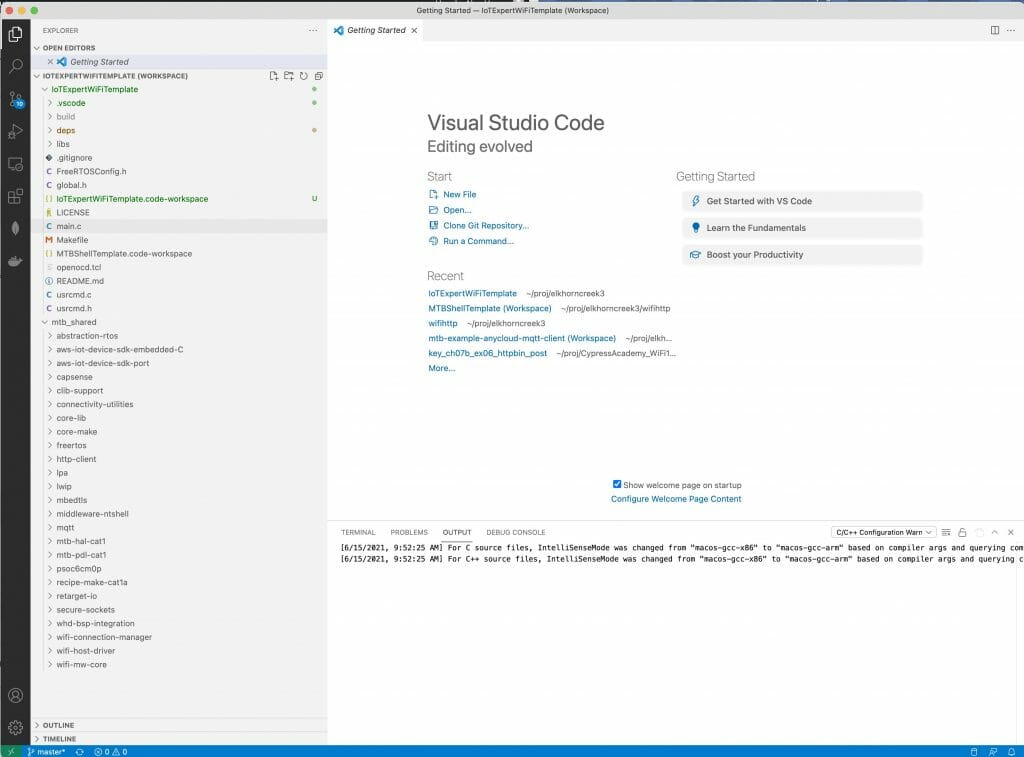

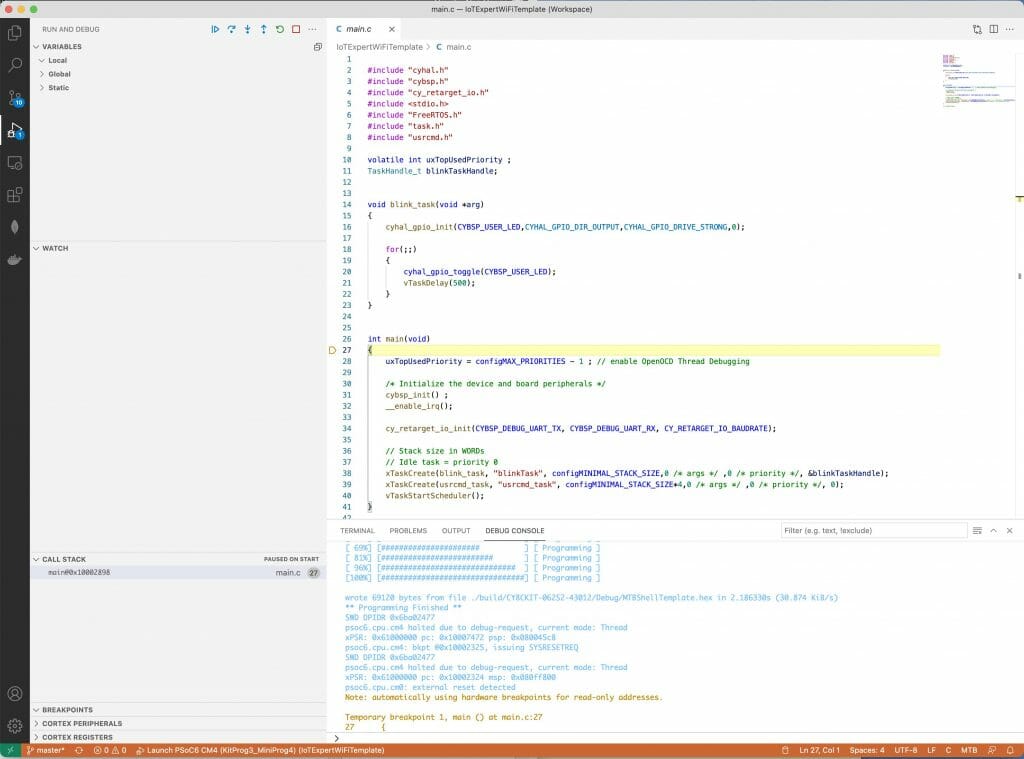

In the last article I got things going by starting from the old template, fixing up the Visual Studio Code configuration, adding the new Cypress Logging functionality and then testing everything.

In this article I will

- Create a new task to manage WiFi

- Add Wireless Connection Manager to the project

- Create wifi_task.h and wifi_task.c

- Update usrcmd.c to send commands to the WiFi task

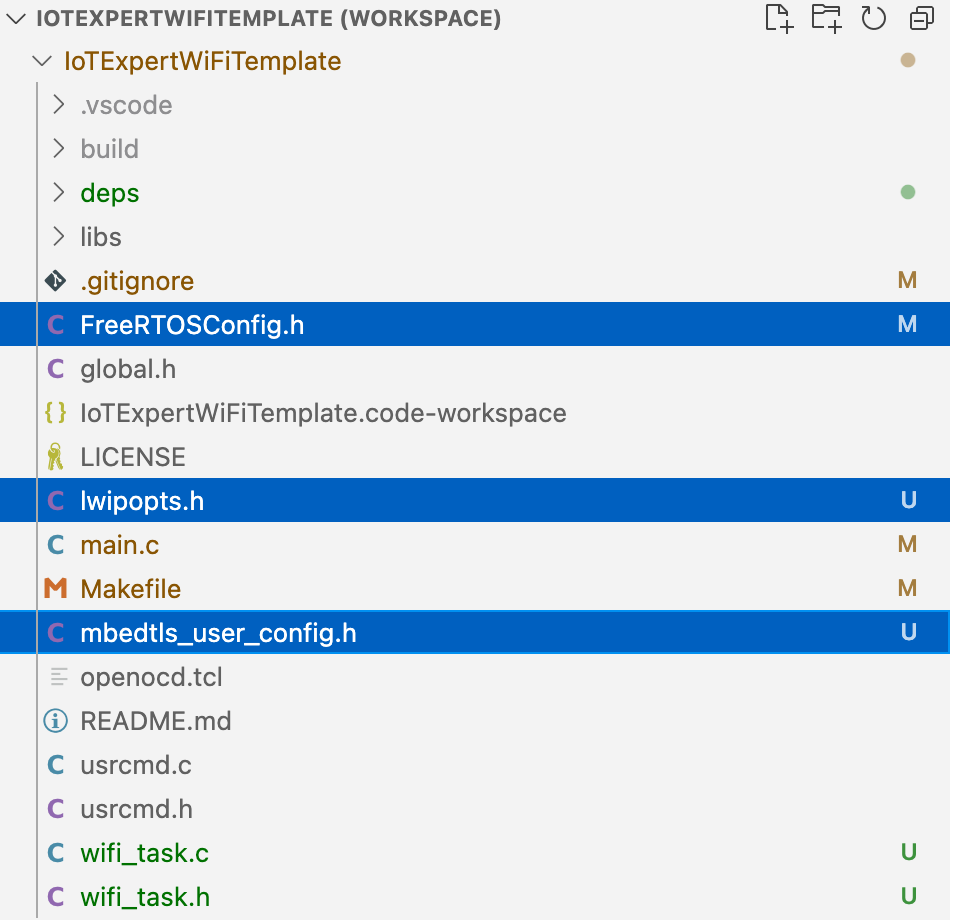

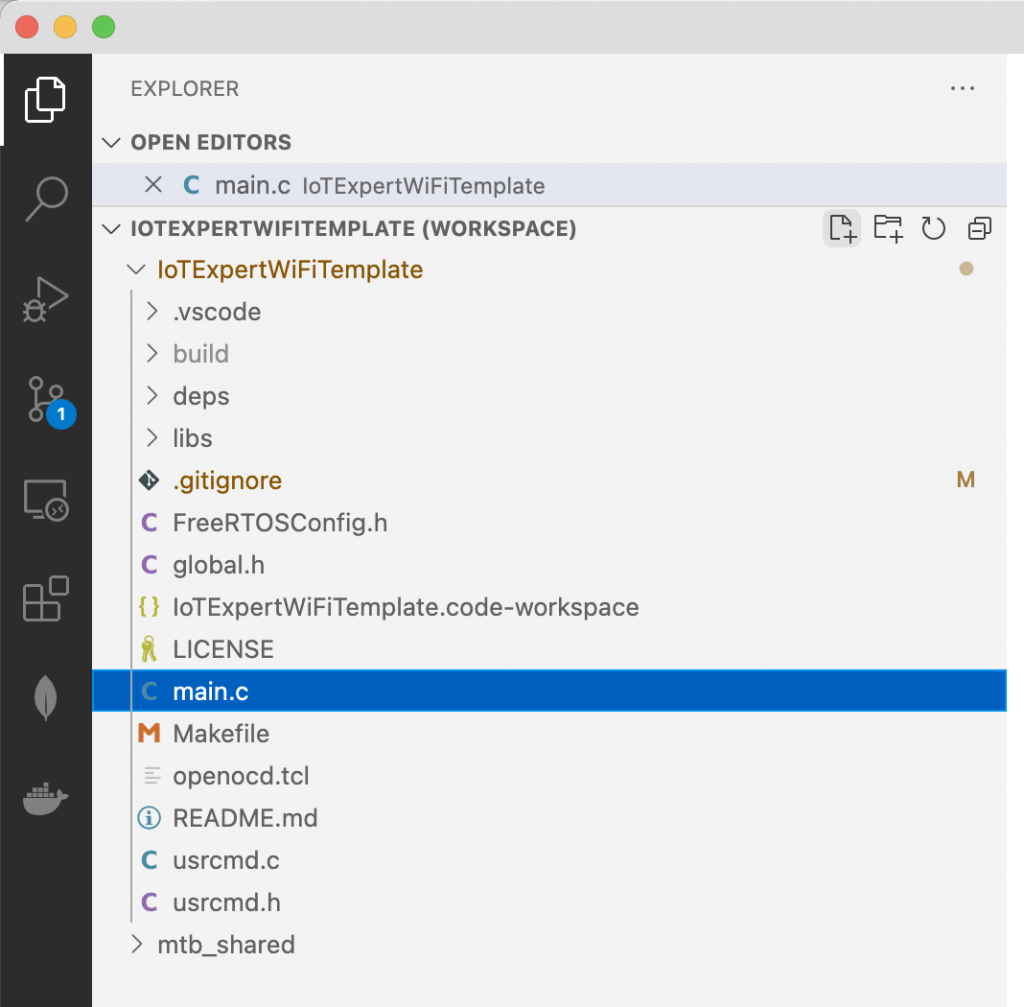

Create New Task to Manage WiFi

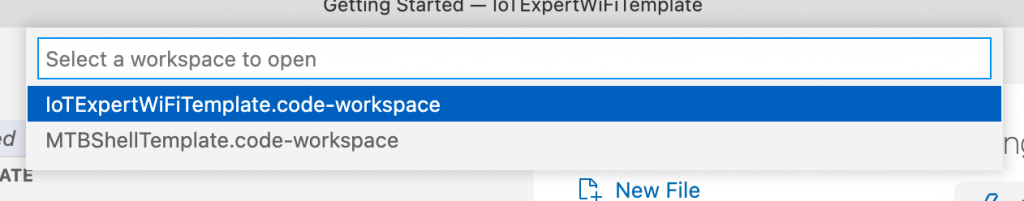

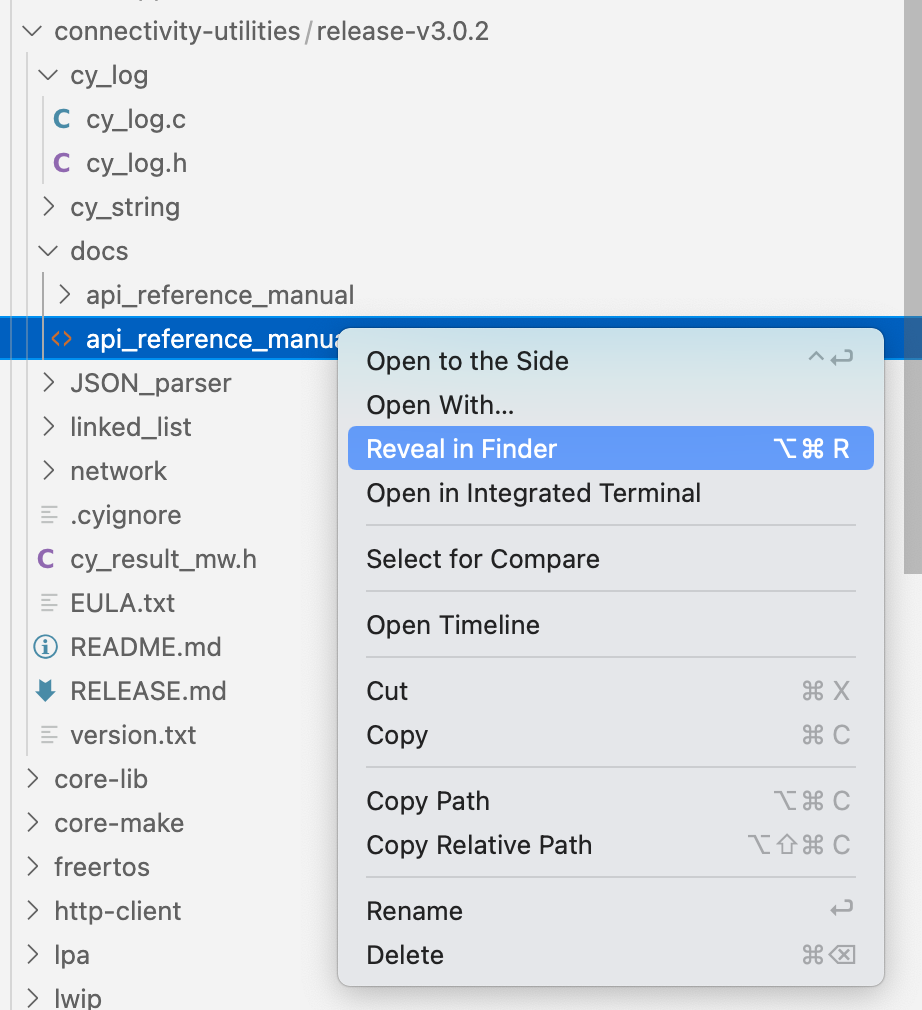

I am going to start by creating a new task (called wifi_task) that will be responsible for managing the WiFi connection. In Visual Studio Code you can create a new file by pressing the little document icon with the + on it. You will need two files: “wifi_task.h” and “wifi_task.c”.

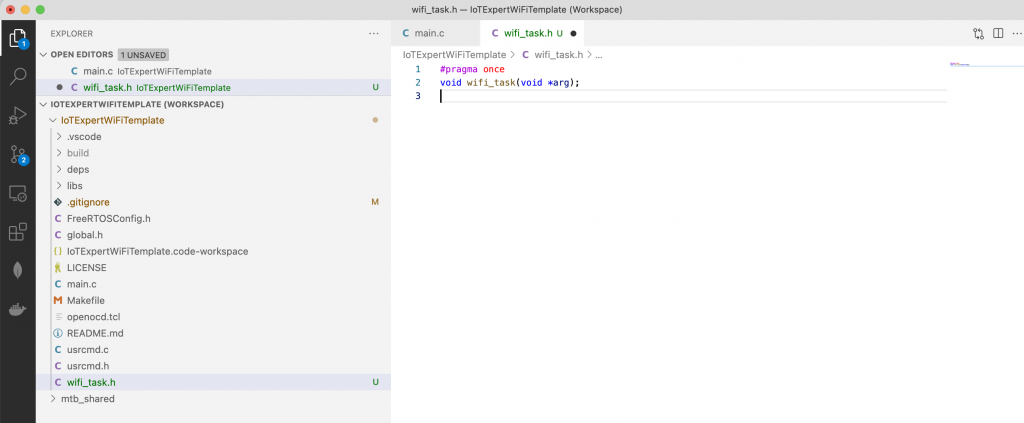

Once you have wifi_task.h, add the function prototype for wifi_task. In addition, add an include guard. I like to use “#pragma once”.

Here is copyable code.

```c
#pragma once

void wifi_task(void *arg);
```

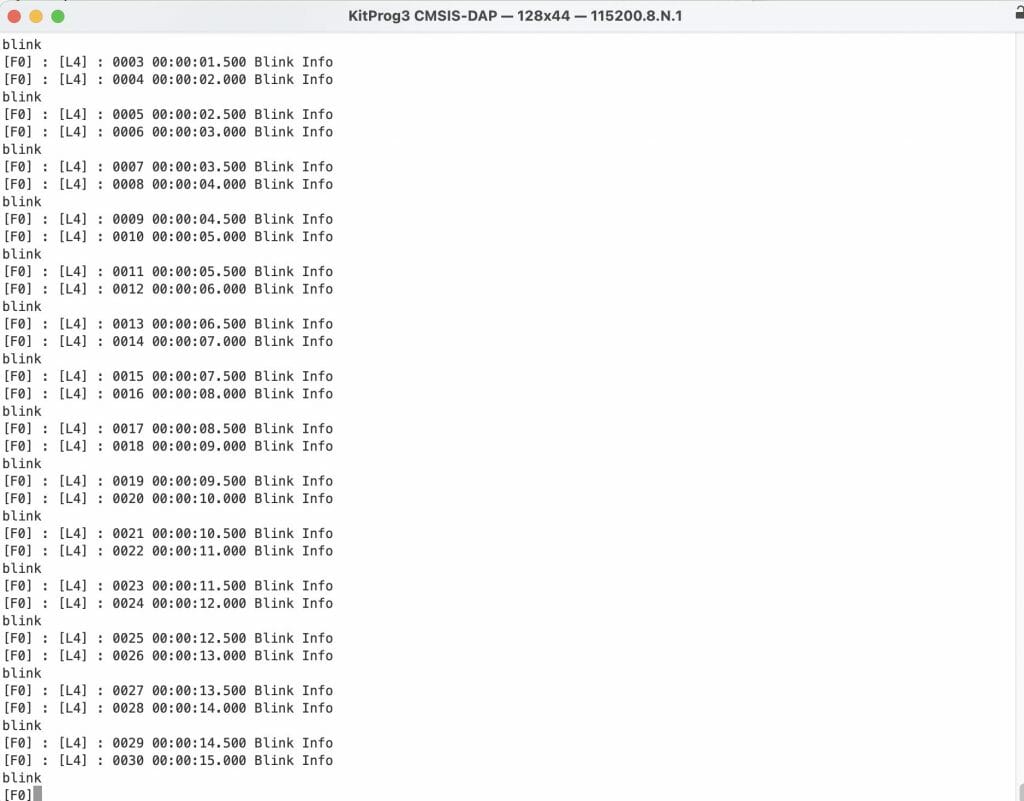

In wifi_task.c I’ll start with a simple “blinking LED” function. Well, actually it will print a message instead of blinking. Here it is:

```c
#include "wifi_task.h"
#include "FreeRTOS.h"
#include "task.h"
#include <stdio.h>

void wifi_task(void *arg)
{
    while(1)
    {
        vTaskDelay(1000);   // wait 1000 RTOS ticks
        printf("blink\n");
    }
}
```
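The value passed to vTaskDelay() is a count of RTOS ticks, not milliseconds, so vTaskDelay(1000) is one second only when configTICK_RATE_HZ is 1000. FreeRTOS provides the pdMS_TO_TICKS() macro for this conversion. The sketch below reproduces that integer arithmetic in plain C so it can be checked off-target; the function name ms_to_ticks and the tick rates in the usage note are illustrative assumptions, not values taken from this project.

```c
#include <stdint.h>

/* Plain-C version of the pdMS_TO_TICKS() formula:
 * ticks = (ms * tick_rate_hz) / 1000, with integer (truncating) division.
 * The product is widened to 64 bits here to avoid overflow. */
static uint32_t ms_to_ticks(uint32_t ms, uint32_t tick_rate_hz)
{
    return (uint32_t)(((uint64_t)ms * tick_rate_hz) / 1000u);
}
```

With a 1000 Hz tick, ms_to_ticks(1000, 1000) is 1000 ticks; with a 100 Hz tick the same one-second delay would be only 100 ticks, which is why writing vTaskDelay(pdMS_TO_TICKS(1000)) is the more portable choice.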

Now that I have a wifi_task (which doesn’t do much yet), let’s update main.c. First, include wifi_task.h:

```c
#include "wifi_task.h"
```

Then create the task. Notice that I start with a pretty big stack.

```c
xTaskCreate(wifi_task, "WiFi", configMINIMAL_STACK_SIZE*20, NULL /* args */, 0 /* priority */, NULL /* task handle */);
```
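The stack depth given to xTaskCreate() is measured in words (units of StackType_t), not bytes. On the Cortex-M4 FreeRTOS port StackType_t is 32 bits, so configMINIMAL_STACK_SIZE*20 reserves 80 times configMINIMAL_STACK_SIZE bytes. The worked check below assumes configMINIMAL_STACK_SIZE is 128, a common value that you should confirm in your own FreeRTOSConfig.h; the macro and type names are stand-ins.

```c
#include <stddef.h>
#include <stdint.h>

/* ASSUMPTION: stand-ins for values from FreeRTOSConfig.h and portmacro.h. */
#define ASSUMED_MINIMAL_STACK_SIZE 128u     /* configMINIMAL_STACK_SIZE, in words */
typedef uint32_t assumed_stack_type_t;      /* StackType_t on Cortex-M ports */

/* xTaskCreate() stack depth is in words; convert to bytes for a RAM budget. */
static size_t stack_words_to_bytes(size_t words)
{
    return words * sizeof(assumed_stack_type_t);
}
```

Under that assumption the WiFi task gets 128 * 20 = 2560 words, or 2560 * 4 = 10240 bytes of stack.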

When you run this, you will see the “blink” output interleaved with the blink from the last article.

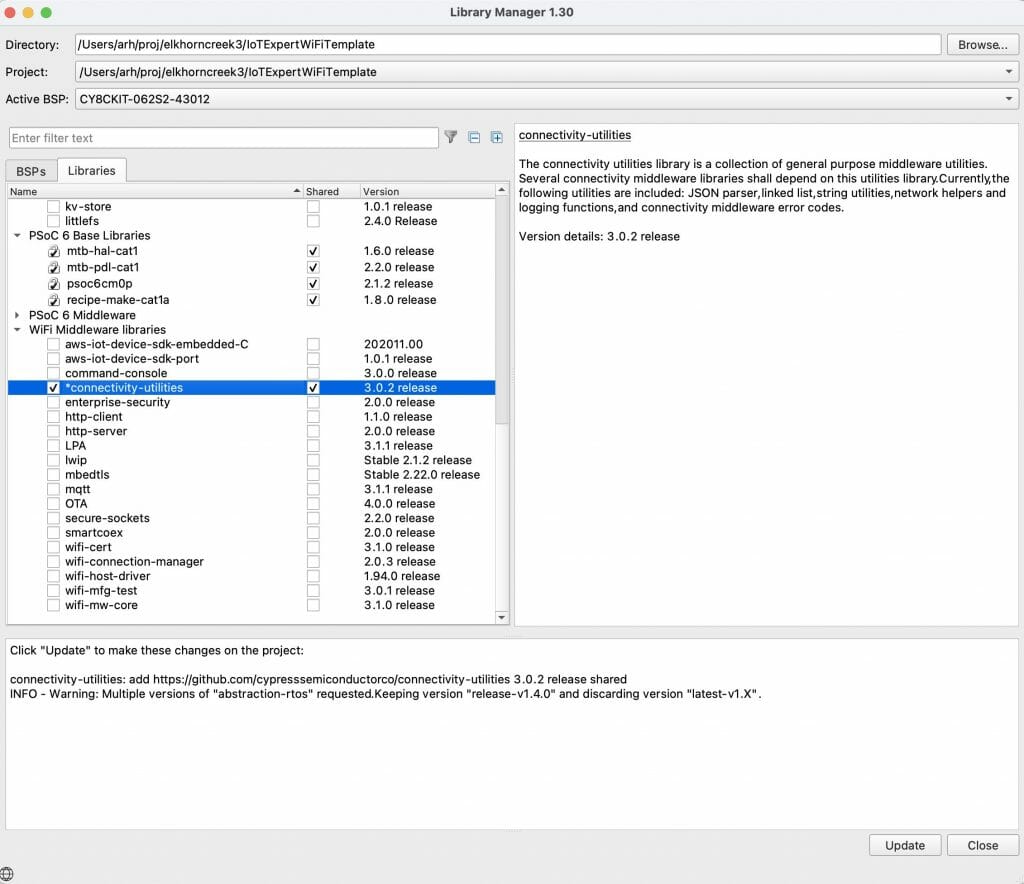

Add Wireless Connection Manager

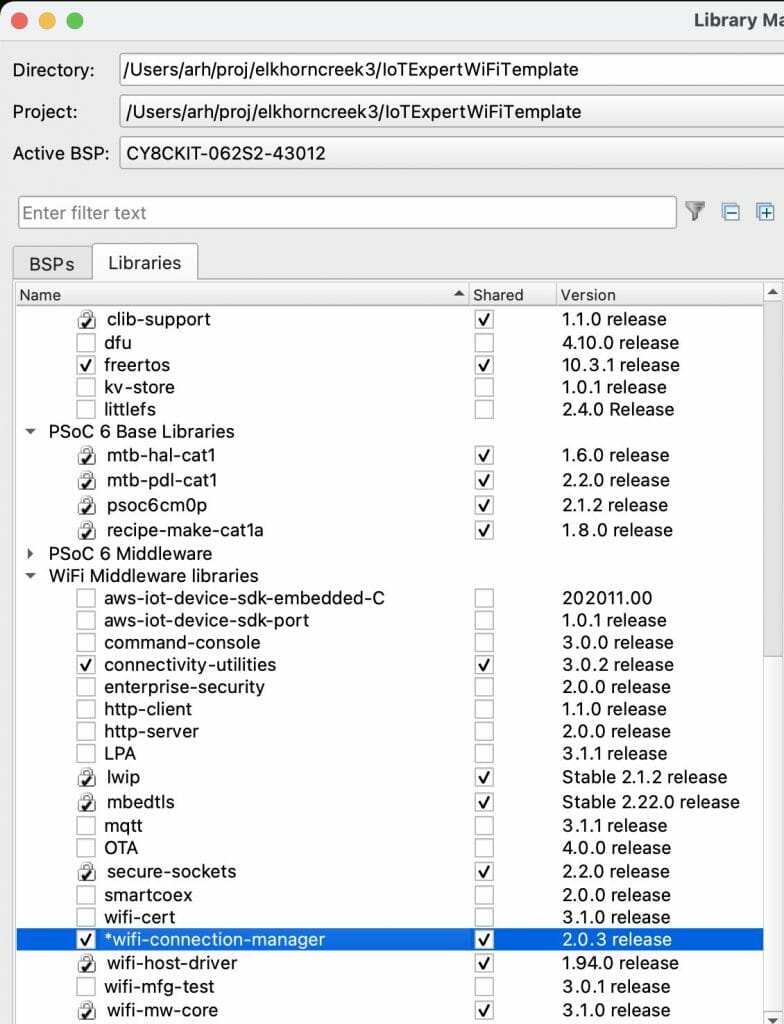

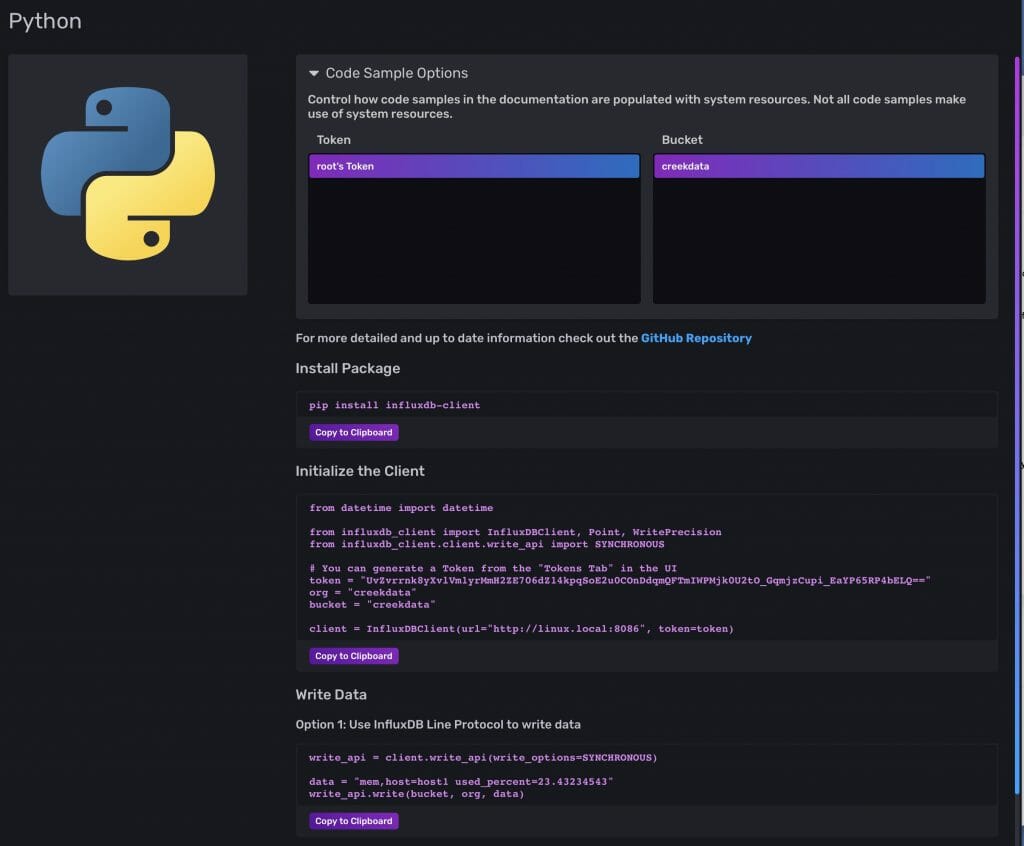

Your next step is to add the Wireless Connection Manager. Start the library browser by running “make modlibs”, then select “wifi-connection-manager”. Notice that doing so brings in a number of other libraries as well; these are the libraries that the WiFi Connection Manager depends on.

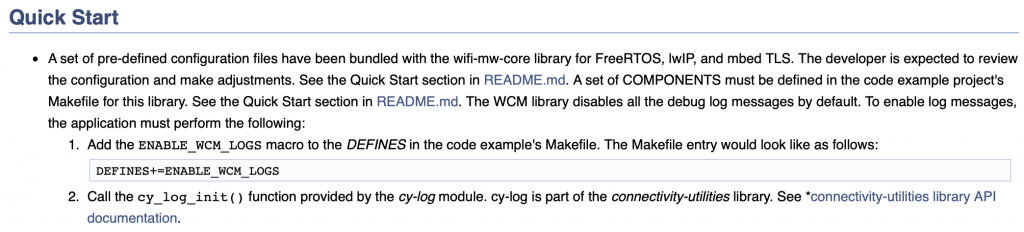

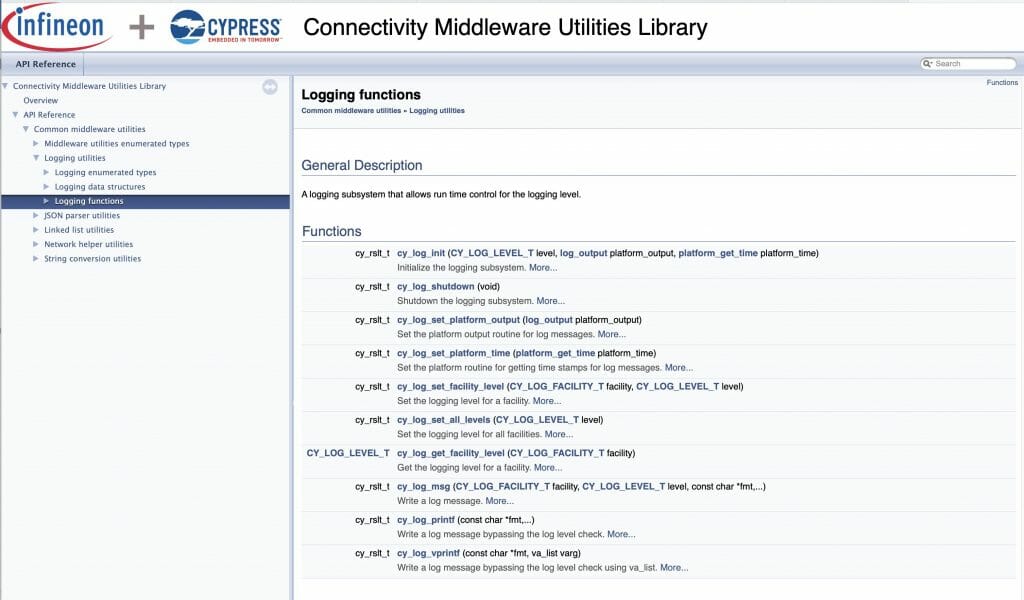

If you look in the wireless connection manager documentation you will find this nice note. It says that the WCM uses the Cypress logging functionality and you can turn it on with a #define. That’s cool. So now I edit the Makefile and add the define.

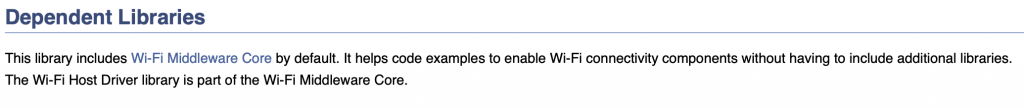

The documentation also says that this library depends on the “Wi-Fi Middleware Core” library.

If you go to the Wi-Fi Middleware Core library documentation, you will see instructions saying that you need to:

- Enable & Configure LWIP

- Enable & Configure MBEDTLS

- Enable & Configure the Cypress RTOS Abstraction

In order to do that, you will need to do two things:

- Copy the configuration files into your project

- Set up some options in the Makefile

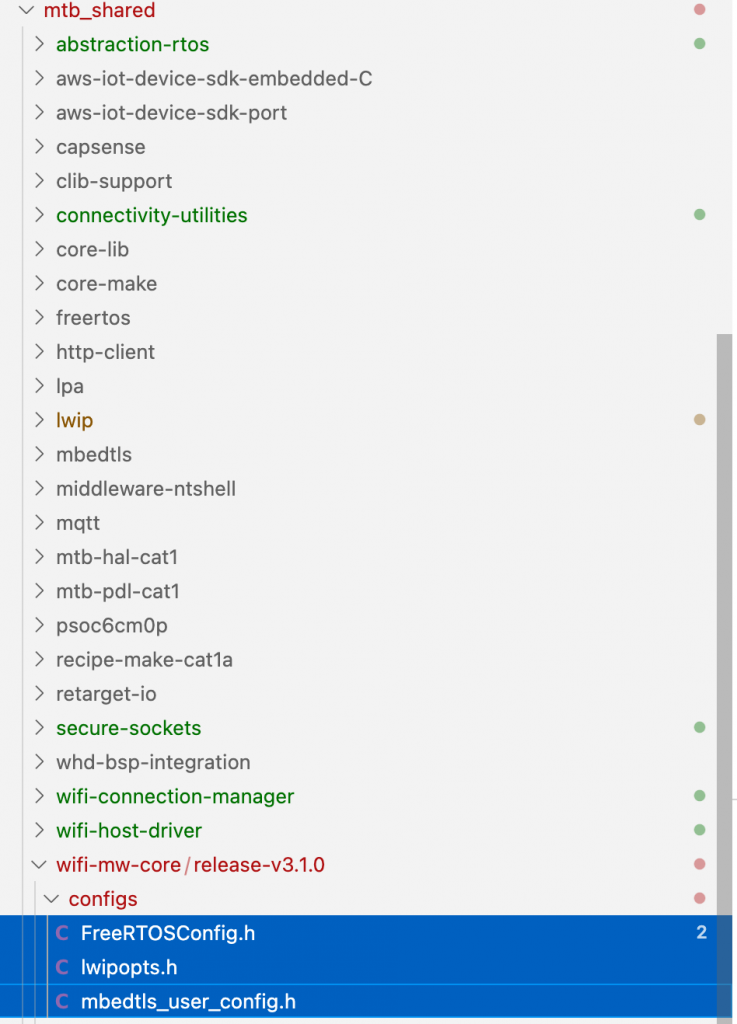

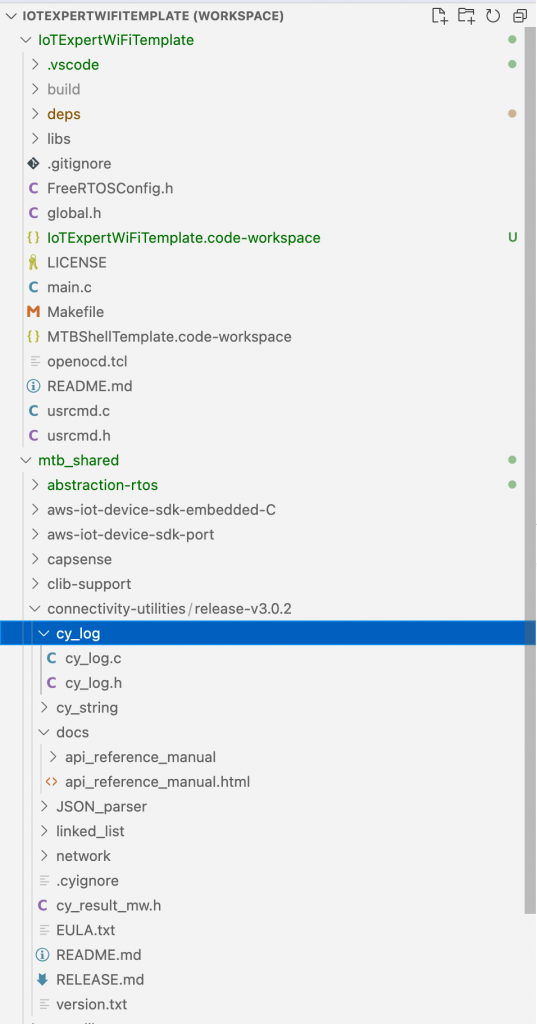

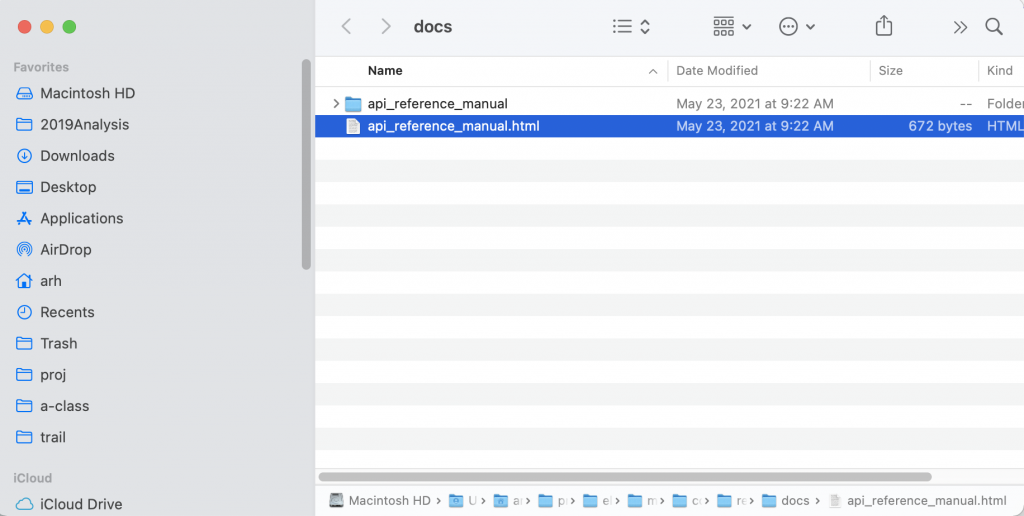

Start by copying the files. The library provides default configurations in mtb_shared/wifi-mw-core/<version>/configs. Copy those files into your project; this can be done in the Visual Studio Code interface using Ctrl-C and Ctrl-V.

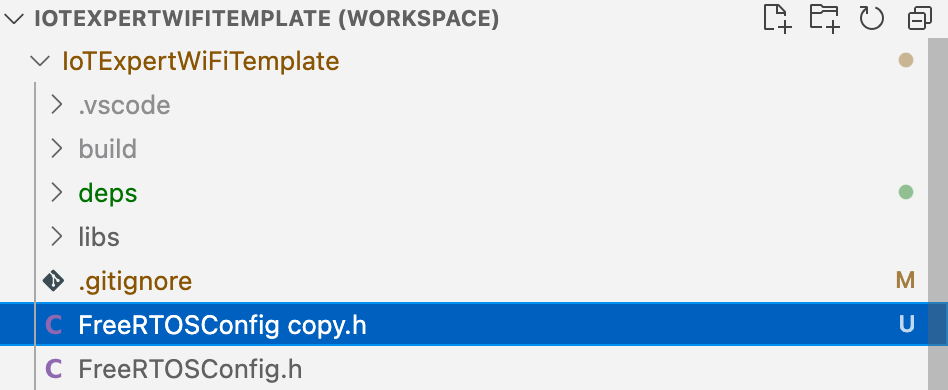

Notice that I now have two FreeRTOSConfig files. So, delete the original file and rename the copied file.

Now your project should look like this:

The next step is to fix the Makefile by adding some defines.

```makefile
DEFINES=CY_RETARGET_IO_CONVERT_LF_TO_CRLF
DEFINES+=CYBSP_WIFI_CAPABLE CY_RTOS_AWARE
DEFINES+=MBEDTLS_USER_CONFIG_FILE='"mbedtls_user_config.h"'
DEFINES+=ENABLE_WIFI_MIDDLEWARE_LOGS
```
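The single quotes around "mbedtls_user_config.h" keep the shell from stripping the double quotes, so the compiler presumably receives -DMBEDTLS_USER_CONFIG_FILE="mbedtls_user_config.h" and the macro expands to a string literal. mbed TLS’s own config header consumes it with an #include MBEDTLS_USER_CONFIG_FILE line. The snippet below only demonstrates the macro’s value; the fallback #define is an assumption added so the sketch compiles without the Makefile and is not part of the real build.

```c
/* Normally supplied by the Makefile as
 * -DMBEDTLS_USER_CONFIG_FILE="mbedtls_user_config.h".
 * This fallback exists only so the sketch builds on its own. */
#ifndef MBEDTLS_USER_CONFIG_FILE
#define MBEDTLS_USER_CONFIG_FILE "mbedtls_user_config.h"
#endif

/* Because the macro is a string literal, it works both in
 * "#include MBEDTLS_USER_CONFIG_FILE" and as an ordinary C string. */
static const char *user_config_file(void)
{
    return MBEDTLS_USER_CONFIG_FILE;
}
```

If the single quotes were dropped from the Makefile, the macro would expand to the bare tokens mbedtls_user_config.h, and the #include line in mbed TLS would fail to compile.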

Then add the required components:

```makefile
COMPONENTS=FREERTOS LWIP MBEDTLS PSOC6HAL
```

Update wifi_task.c

My wifi task is going to work by

- Waiting on a queue for messages of type “wifi_cmdMsg_t”

- When those messages come in, execute the right command.

Start by adding some includes to the wifi_task.c

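```c
#include <stdio.h>
#include <stdbool.h>   // bool
#include <string.h>    // strcmp() in wifi_cmd_enable()
#include "wifi_task.h"
#include "FreeRTOS.h"
#include "task.h"
#include "queue.h"
#include "cy_utils.h"  // CY_ASSERT()
#include "cy_wcm.h"
```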

Then define the legal commands. I will add more commands in future articles, but for this article there is only one: enable. The command message contains:

- The command

- Three arguments of unspecified type (void *)

In addition you will require

- The command queue

- An “initialized” state variable

- A way to keep track of what mode you are in (AP, STA or APSTA)

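```c
// The commands the WiFi task understands (more in future articles)
typedef enum {
    WIFI_CMD_ENABLE,
} wifi_cmd_t;

// A command message: the command plus three generic arguments
typedef struct {
    wifi_cmd_t cmd;
    void *arg0;
    void *arg1;
    void *arg2;
} wifi_cmdMsg_t;

static QueueHandle_t wifi_cmdQueue;           // commands from other tasks
static bool wifi_initialized=false;           // set once the WCM is initialized
static cy_wcm_interface_t wifi_network_mode;  // STA, AP or APSTA
```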
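Because the message carries its arguments as void *, an enum value such as a cy_wcm_interface_t has to be cast into a pointer by the sender and back out by the WiFi task. Routing the cast through uintptr_t makes that round trip explicit and avoids integer/pointer size warnings. The enum below is a hypothetical stand-in for cy_wcm_interface_t; the real constants come from cy_wcm.h.

```c
#include <stdint.h>

/* Hypothetical stand-in for cy_wcm_interface_t. */
typedef enum {
    DEMO_IF_STA,
    DEMO_IF_AP,
    DEMO_IF_AP_STA,
} demo_interface_t;

/* Sender side: pack a small integer value into a void * argument. */
static void *iface_to_arg(demo_interface_t iface)
{
    return (void *)(uintptr_t)iface;
}

/* Receiver side: recover the value in the WiFi task. */
static demo_interface_t arg_to_iface(void *arg)
{
    return (demo_interface_t)(uintptr_t)arg;
}
```

This only works because the value fits in a pointer; larger data would need to be passed by pointer to storage that outlives the message.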

The first “command” that I will create is enable. It will:

- Set up the interface

- Initialize the WiFi. This single init call actually does a lot of work, including powering on the WiFi chip, downloading the firmware into it, creating the RTOS tasks it needs, and enabling LWIP and MBEDTLS

```c
// Forward declaration: the callback is defined after wifi_enable()
static void wifi_network_event_cb(cy_wcm_event_t event, cy_wcm_event_data_t *event_data);

static void wifi_enable(cy_wcm_interface_t interface)
{
    cy_rslt_t result;
    cy_wcm_config_t config = {.interface = interface};

    result = cy_wcm_init(&config); // Initialize the connection manager
    CY_ASSERT(result == CY_RSLT_SUCCESS);

    result = cy_wcm_register_event_callback(wifi_network_event_cb);
    CY_ASSERT(result == CY_RSLT_SUCCESS);

    wifi_network_mode = interface;
    wifi_initialized = true;
    printf("\nWi-Fi Connection Manager initialized\n");
}
```
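As written, wifi_initialized is set but never read, so a second “net enable” would call cy_wcm_init() again. One option is to check the flag first. The sketch below shows that guard in isolation; fake_wcm_init() is a hypothetical stub standing in for cy_wcm_init() so the logic can run off-target, and whether a repeated cy_wcm_init() call is actually harmful depends on the library version.

```c
#include <stdbool.h>

static bool demo_initialized = false;  /* plays the role of wifi_initialized */
static int demo_init_calls = 0;        /* counts calls to the stub below */

/* Hypothetical stub for cy_wcm_init(); returns 0 for success. */
static int fake_wcm_init(void)
{
    demo_init_calls++;
    return 0;
}

/* Initialize only once; later calls report success without re-initializing. */
static bool demo_enable(void)
{
    if (demo_initialized)
        return true;
    if (fake_wcm_init() != 0)
        return false;
    demo_initialized = true;
    return true;
}
```

Calling demo_enable() twice runs the stub only once, because the second call returns early on the flag.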

In the previous block of code, notice that I register a callback. The callback is a switch that prints a message based on the event type. Notice that three lines are commented out; we will fix those in the next article.

```c
static void wifi_network_event_cb(cy_wcm_event_t event, cy_wcm_event_data_t *event_data)
{
    cy_wcm_ip_address_t ip_addr;

    switch(event)
    {
        case CY_WCM_EVENT_CONNECTING:        /**< STA connecting to an AP. */
            printf("Connecting to AP ... \n");
            break;
        case CY_WCM_EVENT_CONNECTED:         /**< STA connected to the AP. */
            printf("Connected to AP and network is up !! \n");
            break;
        case CY_WCM_EVENT_CONNECT_FAILED:    /**< STA connection to the AP failed. */
            printf("Connection to AP Failed ! \n");
            break;
        case CY_WCM_EVENT_RECONNECTED:       /**< STA reconnected to the AP. */
            printf("Network is up again! \n");
            break;
        case CY_WCM_EVENT_DISCONNECTED:      /**< STA disconnected from the AP. */
            printf("Network is down! \n");
            break;
        case CY_WCM_EVENT_IP_CHANGED:        /**< IP address change event. This event is notified after connection, re-connection, and IP address change due to DHCP renewal. */
            cy_wcm_get_ip_addr(wifi_network_mode, &ip_addr, 1);
            // printf("Station IP Address Changed: %s\n",wifi_ntoa(&ip_addr));
            break;
        case CY_WCM_EVENT_STA_JOINED_SOFTAP: /**< An STA device connected to SoftAP. */
            // printf("STA Joined: %s\n",wifi_mac_to_string(event_data->sta_mac));
            break;
        case CY_WCM_EVENT_STA_LEFT_SOFTAP:   /**< An STA device disconnected from SoftAP. */
            // printf("STA Left: %s\n",wifi_mac_to_string(event_data->sta_mac));
            break;
    }
}
```
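The commented-out lines call helpers such as wifi_mac_to_string() that do not exist yet. As a rough idea of what such a helper has to do, the sketch below formats a 6-byte MAC address as colon-separated hex. The name mac_to_string_sketch and the static return buffer are assumptions for illustration, not the helper built in the next article.

```c
#include <stdint.h>
#include <stdio.h>

/* Format a 6-byte MAC as "aa:bb:cc:dd:ee:ff".
 * Returns a pointer to a static buffer that is overwritten on each call,
 * so it is not safe to call from several tasks at once. */
static const char *mac_to_string_sketch(const uint8_t mac[6])
{
    static char buf[18];  /* 6 * 2 hex digits + 5 colons + NUL */
    snprintf(buf, sizeof(buf), "%02x:%02x:%02x:%02x:%02x:%02x",
             mac[0], mac[1], mac[2], mac[3], mac[4], mac[5]);
    return buf;
}
```

For the bytes {0x00, 0xa0, 0x50, 0x12, 0x34, 0xff} this produces "00:a0:50:12:34:ff".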

Now I want to update the main loop of the WiFi task. It is an infinite loop that processes command messages from other tasks.

```c
void wifi_task(void *arg)
{
    // Queue of up to 10 command messages from other tasks
    wifi_cmdQueue = xQueueCreate(10,sizeof(wifi_cmdMsg_t));
    wifi_cmdMsg_t msg;

    while(1)
    {
        // Block until another task sends a command
        xQueueReceive(wifi_cmdQueue,&msg,portMAX_DELAY);
        switch(msg.cmd)
        {
            case WIFI_CMD_ENABLE:
                printf("Received wifi enable message\n");
                wifi_enable((cy_wcm_interface_t)msg.arg0);
                break;
        }
    }
}
```
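FreeRTOS queues copy each item by value into storage allocated by xQueueCreate(), which is why a sender can build a message in a local variable and return immediately. The single-threaded ring buffer below is only a model of that copy-in/copy-out behaviour with the same depth of 10; it has no blocking or locking, and all of its names are hypothetical.

```c
#include <stdbool.h>
#include <string.h>

#define DEMO_QUEUE_DEPTH 10  /* same depth as xQueueCreate(10, ...) */

typedef struct {
    int cmd;
    void *arg0;
    void *arg1;
    void *arg2;
} demo_msg_t;  /* same shape as wifi_cmdMsg_t */

typedef struct {
    demo_msg_t items[DEMO_QUEUE_DEPTH];
    unsigned head;   /* index of the oldest item */
    unsigned count;  /* number of queued items */
} demo_queue_t;

/* Copy *msg into the queue; fails when full (like xQueueSend with 0 wait). */
static bool demo_send(demo_queue_t *q, const demo_msg_t *msg)
{
    if (q->count == DEMO_QUEUE_DEPTH)
        return false;
    unsigned tail = (q->head + q->count) % DEMO_QUEUE_DEPTH;
    memcpy(&q->items[tail], msg, sizeof(*msg));
    q->count++;
    return true;
}

/* Copy the oldest item out; fails when empty (a real receive would block). */
static bool demo_receive(demo_queue_t *q, demo_msg_t *out)
{
    if (q->count == 0)
        return false;
    memcpy(out, &q->items[q->head], sizeof(*out));
    q->head = (q->head + 1) % DEMO_QUEUE_DEPTH;
    q->count--;
    return true;
}
```

Changing the sender’s local message after demo_send() does not affect the queued copy, mirroring how the real queue decouples the shell task from the WiFi task.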

Other tasks in the system “COULD” create a message and submit it to the queue themselves. I think it is easier to provide a function that other threads can call. Here is the wifi_cmd_enable function. It takes a char * of either “STA”, “AP”, or “APSTA” and submits the right message to the queue.

```c
bool wifi_cmd_enable(char *interface)
{
    wifi_cmdMsg_t msg;

    // The queue is created when wifi_task starts; fail if it is not running yet
    if(wifi_cmdQueue == NULL)
        return false;

    msg.cmd = WIFI_CMD_ENABLE;
    msg.arg1 = NULL; // unused by this command
    msg.arg2 = NULL;

    if(strcmp(interface,"STA") == 0)
        msg.arg0 = (void *)CY_WCM_INTERFACE_TYPE_STA;
    else if(strcmp(interface,"AP") == 0)
        msg.arg0 = (void *)CY_WCM_INTERFACE_TYPE_AP;
    else if(strcmp(interface,"APSTA") == 0)
        msg.arg0 = (void *)CY_WCM_INTERFACE_TYPE_AP_STA;
    else
    {
        printf("Legal options are STA, AP, APSTA\n");
        return false;
    }

    // Don't block the caller; report failure if the queue is full
    return xQueueSend(wifi_cmdQueue,&msg,0) == pdTRUE;
}
```
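The if/else chain works, but the accepted names and their interface values can also live in one table, which keeps the parser and the help text from drifting apart as more modes are added. The sketch uses a hypothetical enum in place of cy_wcm_interface_t and a hypothetical function name; like the original, it matches names case-sensitively.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for cy_wcm_interface_t. */
typedef enum { MODE_STA, MODE_AP, MODE_AP_STA } demo_mode_t;

/* One row per accepted interface name. */
static const struct {
    const char *name;
    demo_mode_t mode;
} mode_table[] = {
    { "STA",   MODE_STA },
    { "AP",    MODE_AP },
    { "APSTA", MODE_AP_STA },
};

/* Look up a mode by name; returns false for anything not in the table. */
static bool parse_mode(const char *name, demo_mode_t *out)
{
    for (size_t i = 0; i < sizeof(mode_table) / sizeof(mode_table[0]); i++) {
        if (strcmp(name, mode_table[i].name) == 0) {
            *out = mode_table[i].mode;
            return true;
        }
    }
    return false;
}
```

Adding a new mode then means adding one table row, and a help message could be generated by walking the same table.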

Once I have the nice function for the other tasks, I add it to the public interface in wifi_task.h

```c
#pragma once
#include <stdbool.h>

void wifi_task(void *arg);
bool wifi_cmd_enable(char *interface);
```

Add a new user command “net”

Now that I have the wifi_task set up, I want to add a “net” command to the command line shell. Start by adding the include to usrcmd.c:

```c
#include "wifi_task.h"
```

Then create a function prototype for a new command.

```c
static int usrcmd_net(int argc, char **argv);
```

Add the command to the list of commands that the shell knows.

```c
static const cmd_table_t cmdlist[] = {
    { "help", "This is a description text string for help command.", usrcmd_help },
    { "info", "This is a description text string for info command.", usrcmd_info },
    { "clear", "Clear the screen", usrcmd_clear },
    { "pargs","print the list of arguments", usrcmd_pargs},
#ifdef configUSE_TRACE_FACILITY
#if configUSE_STATS_FORMATTING_FUNCTIONS ==1
    { "tasks","print the list of RTOS Tasks", usrcmd_list},
#endif
#endif
    { "net","net [help,enable]",usrcmd_net},
};
```
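Conceptually, the shell walks a table like cmdlist and calls the function whose name matches argv[0]. The sketch below models that lookup with a hypothetical demo_cmd_t table and stub handlers; it is not the shell library’s actual code, which may differ in details such as how it reports unknown commands.

```c
#include <stddef.h>
#include <string.h>

typedef int (*demo_cmd_fn)(int argc, char **argv);

typedef struct {
    const char *name;
    const char *desc;
    demo_cmd_fn fn;
} demo_cmd_t;  /* hypothetical model of cmd_table_t */

/* Stub handlers; demo_net returns argc so a caller can see it ran. */
static int demo_net(int argc, char **argv)  { (void)argv; return argc; }
static int demo_help(int argc, char **argv) { (void)argc; (void)argv; return 0; }

static const demo_cmd_t demo_cmds[] = {
    { "help", "show help", demo_help },
    { "net",  "net [help,enable]", demo_net },
};

/* Find argv[0] in the table and call it; returns -1 if nothing matches. */
static int demo_dispatch(int argc, char **argv)
{
    if (argc < 1)
        return -1;
    for (size_t i = 0; i < sizeof(demo_cmds) / sizeof(demo_cmds[0]); i++) {
        if (strcmp(argv[0], demo_cmds[i].name) == 0)
            return demo_cmds[i].fn(argc, argv);
    }
    return -1;
}
```

Typing “net enable AP” would reach the net handler with argc == 3, which is the case usrcmd_net handles by passing argv[2] to wifi_cmd_enable().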

Then create the net command. I want a BUNCH of net commands, including help, enable, connect, disconnect, …, but for now we will start with enable. This function just calls the wifi_cmd_enable function that we added to the wifi_task.h interface.

```c
static int usrcmd_net(int argc, char **argv)
{
    if(argc == 1 || strcmp("help",argv[1]) == 0)
    {
        printf("net [help,enable,connect,disconnect,mdns,scan,ping,lookup]\n");
        printf("%-35s %s\n","net enable","Enable the WiFi Driver & load the WiFi Firmware");
        return 0;
    }

    if(strcmp("enable",argv[1])==0)
    {
        if(argc == 2)
            wifi_cmd_enable("STA");    // default to station mode
        else
            wifi_cmd_enable(argv[2]);  // "STA", "AP" or "APSTA"
        return 0;
    }

    return 0;
}
```

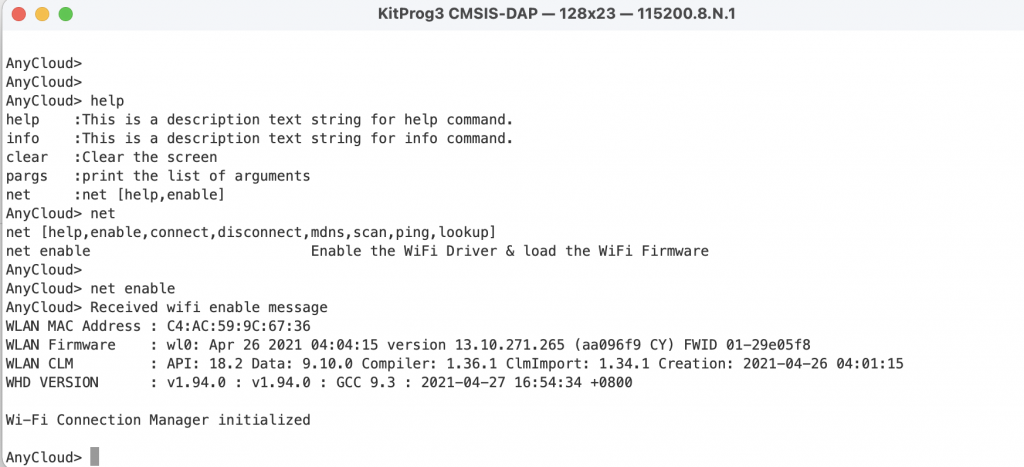

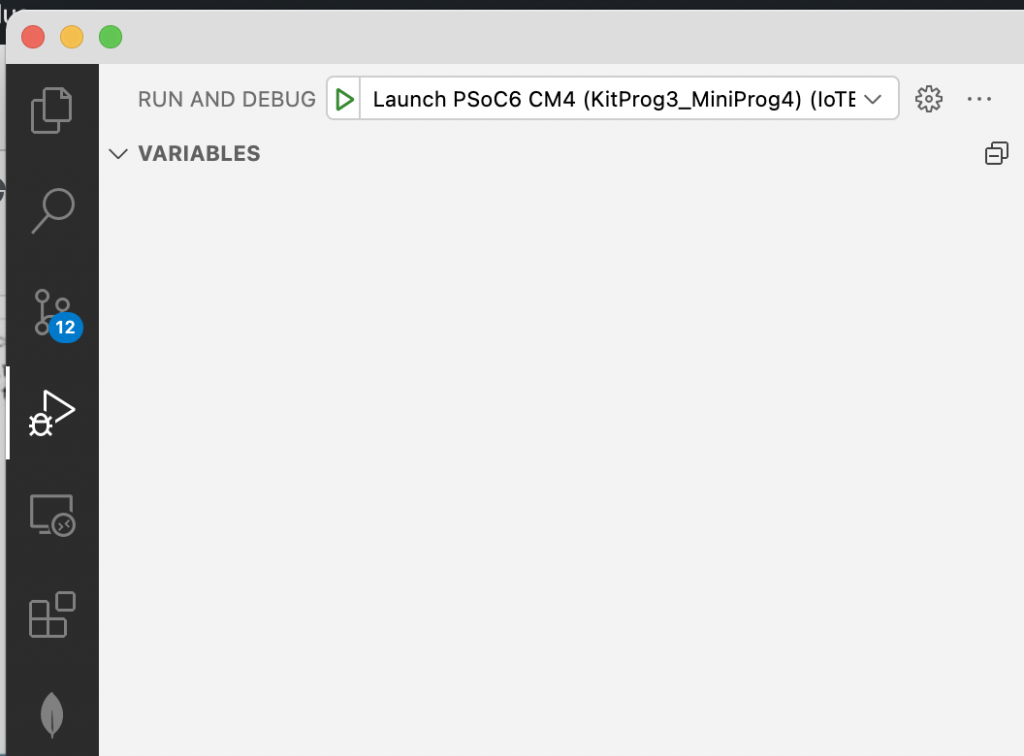

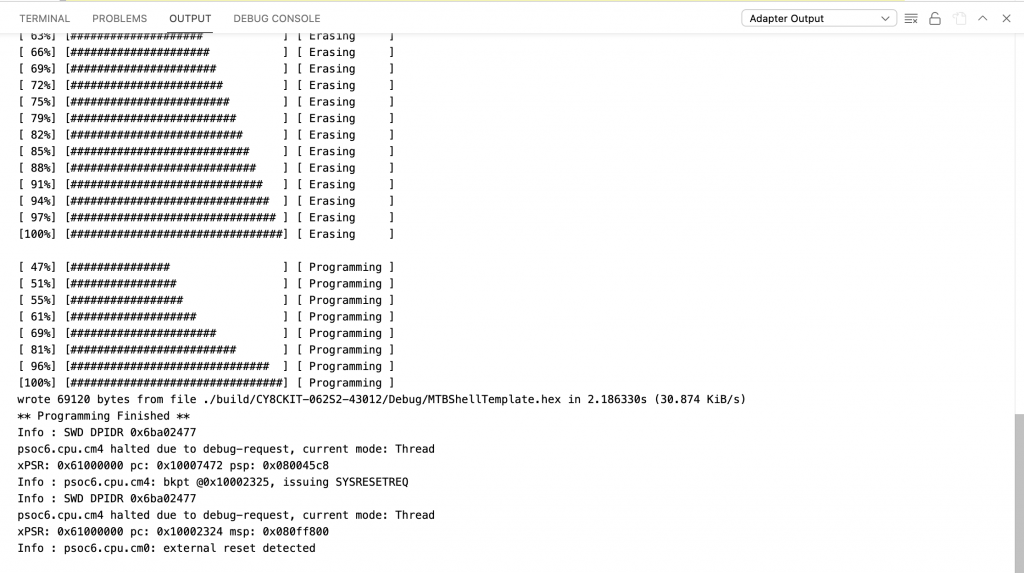

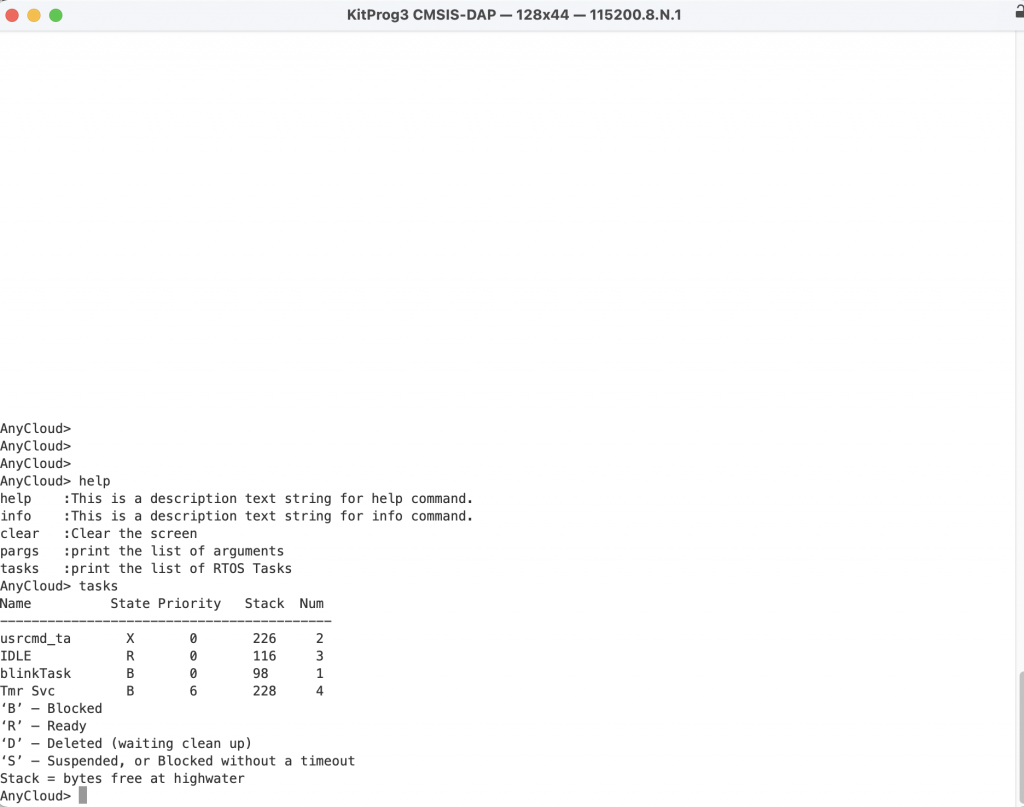

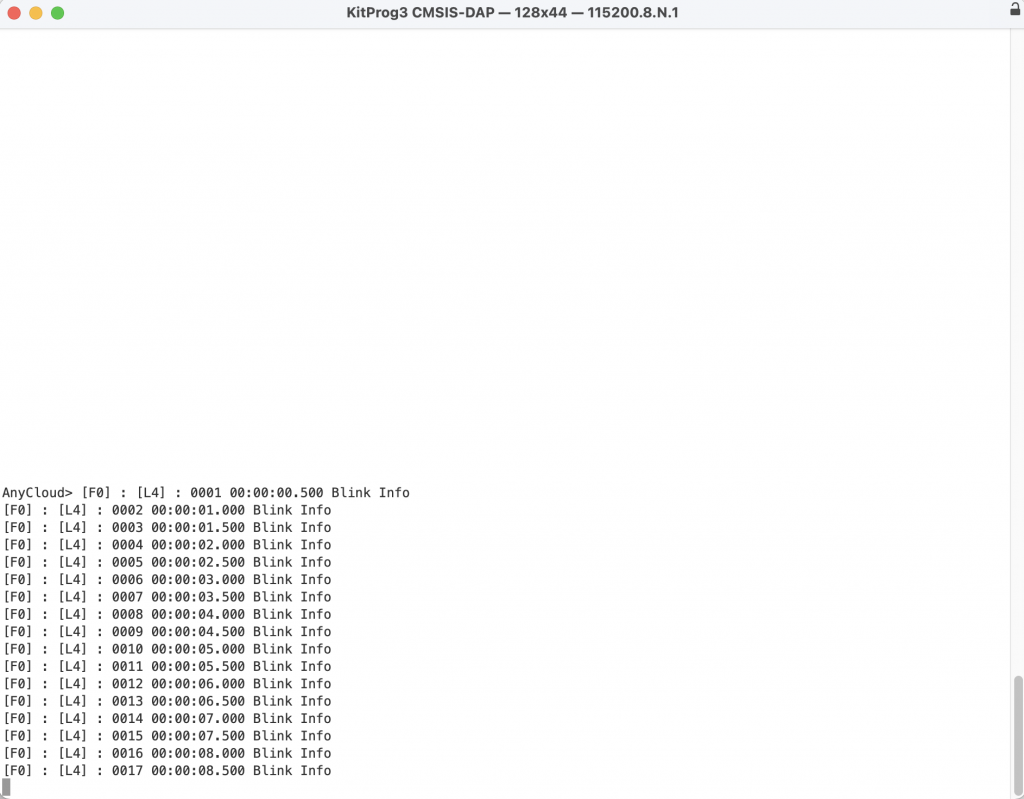

Test

Program and test. The “net enable” command now works. Notice that it prints output about the WiFi firmware being loaded into the chip, then reports that the chip is enabled and the connection manager is running.

In the next article I will create a new library of helper functions for wifi.